Ardent Roleplay is a toolset for any tabletop role-playing game. Here I’m going to discuss the development process behind how we’re setting up tokens in Ardent Roleplay, and detail some of our prototyping and iterations as we barrel towards our Q3 2018 release.

First things first, what are image targets?

The term “image target” refers to the visual information that Vuforia, the AR engine, uses to track where a 3D asset sits in the real world; it also acts as the pivot point for the 3D model. For the player, this is the image you find on the Ardent Roleplay tokens. When you aim your camera at a token, the system searches a database for the object connected to that target and displays the 3D object on top of the image target, bringing the world to life on your device.
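
To make that lookup step concrete, here is a minimal sketch of the idea in Python. It is not how Vuforia or Ardent Roleplay actually store targets; the target names, asset paths, and the `resolve_model` helper are all made up for illustration.

```python
from typing import Optional

# Hypothetical target database: image target name -> 3D asset to display.
# These names and paths are invented for this example.
TARGET_DATABASE = {
    "goblin_token": "models/goblin.fbx",
    "tavern_stage": "models/tavern.fbx",
}


def resolve_model(detected_target: str) -> Optional[str]:
    """Return the asset linked to a detected image target, or None if unknown."""
    return TARGET_DATABASE.get(detected_target)


# The AR engine reports which target the camera sees; we look up what to draw.
print(resolve_model("goblin_token"))
print(resolve_model("unknown_token"))
```

The point is simply that each target needs to resolve to exactly one object, which is where things went wrong for us later on.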

Token design through iteration

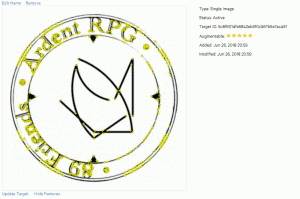

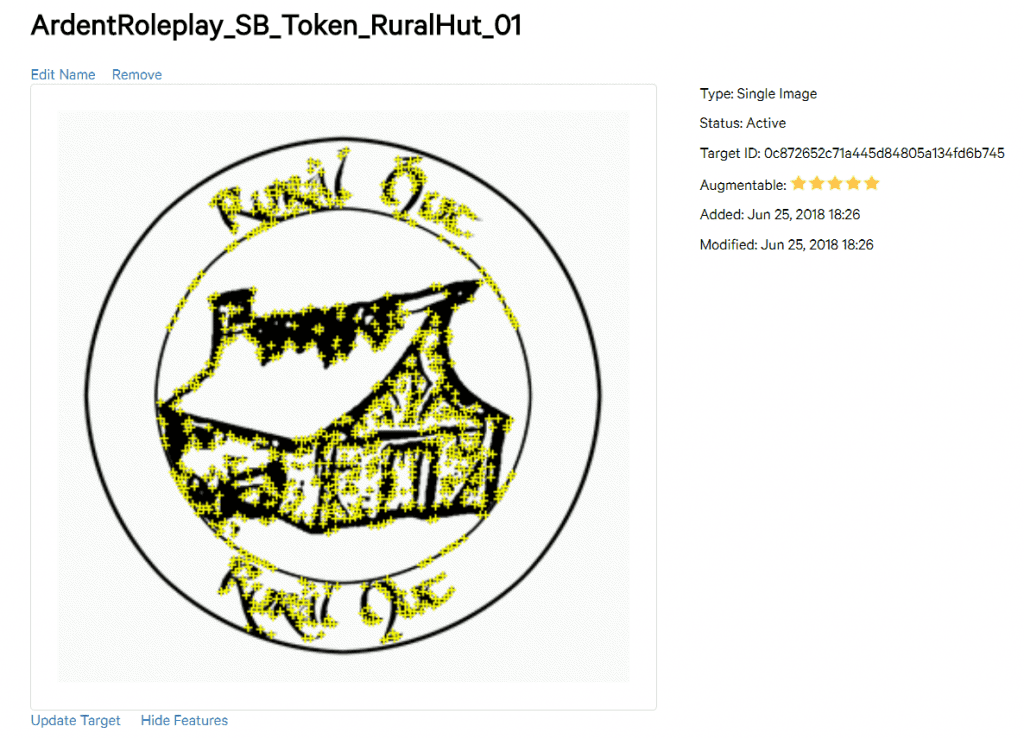

So, what next? We need to make tokens for Ardent Roleplay. To assist with testing and fast design iteration, we built a procedural system to generate the inner designs, but we came across an issue: the inner image itself was being ignored by the Vuforia tracking algorithm.

The yellow marks on the image above represent the points of information that Vuforia uses to track the marker. While Vuforia rated this marker’s information highly, we found that it was using the wording around the edge of the marker as its primary recognition point, and that same wording appeared, in some combination, on our other markers. This resulted in multiple 3D objects all being called at once.

How was it fixed?

It seems simple in retrospect… we added more image points to the target. Now Vuforia recognises the internal image just as much as the outer edge, so when Ardent Roleplay looks at an image target, it has clear data to connect with an object in the database.
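
A toy model shows why this helps. The sketch below is not Vuforia's algorithm; it assumes each marker is just a set of feature points and that a marker "matches" when enough of its points are seen. The threshold, point counts, and names are all invented for illustration.

```python
def matching_targets(observed, targets, threshold=0.6):
    """Return every target whose feature points are mostly present in the observed set."""
    matches = []
    for name, features in targets.items():
        overlap = len(observed & features) / len(features)
        if overlap >= threshold:
            matches.append(name)
    return sorted(matches)


# Feature points from the edge wording, shared by every marker (assumed count).
BORDER_TEXT = {f"text_{i}" for i in range(6)}


def make_target(prefix, inner_points):
    """Build a marker from the shared border plus its own inner-image points."""
    return BORDER_TEXT | {f"{prefix}_{i}" for i in range(inner_points)}


# Before: the inner image contributes almost nothing, so markers look alike.
sparse = {"goblin": make_target("goblin", 1), "dragon": make_target("dragon", 1)}
# After: the inner image carries most of the points, so markers are distinct.
dense = {"goblin": make_target("goblin", 8), "dragon": make_target("dragon", 8)}

print(matching_targets(sparse["goblin"], sparse))  # ['dragon', 'goblin']
print(matching_targets(dense["goblin"], dense))    # ['goblin']
```

With only one inner point, the shared wording makes up most of each marker, so looking at the goblin token also triggers the dragon. Once the inner image dominates, only the right marker matches.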

But what about the size of the objects?

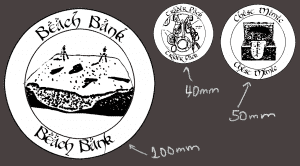

The data point issue was not the only one we faced when designing image targets. Small-scale tokens wouldn’t work when you tried to view the entire table. Once the image target took up less than 20% of the overall screen space, the model tracking would become unreliable or simply drop.
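
As a rough way to reason about that 20% figure, here is a small sketch that estimates how much of the screen a target covers. The 20% threshold comes from our testing above; the pixel-based calculation, the function names, and the example resolutions are assumptions made for illustration, not part of Vuforia.

```python
# Observed in our tests: tracking degrades once the target covers less than this.
MIN_SCREEN_FRACTION = 0.20


def screen_fraction(target_w_px, target_h_px, screen_w_px, screen_h_px):
    """Fraction of the screen area covered by the target's on-screen bounding box."""
    return (target_w_px * target_h_px) / (screen_w_px * screen_h_px)


def tracking_likely_reliable(target_w_px, target_h_px, screen_w_px, screen_h_px):
    """True when the target covers at least the minimum fraction of the screen."""
    fraction = screen_fraction(target_w_px, target_h_px, screen_w_px, screen_h_px)
    return fraction >= MIN_SCREEN_FRACTION


# A 600x600 px target on a 1920x1080 screen covers about 17% of it.
print(tracking_likely_reliable(600, 600, 1920, 1080))  # False
# Moving closer so it appears as 700x700 px brings it to about 24%.
print(tracking_likely_reliable(700, 700, 1920, 1080))  # True
```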

This unreliability is a pretty common theme with image-target-based AR, and the most common ways to fix this type of issue are either larger image targets or more reliable image targets. So, we did both.

By boosting the stage markers (the markers for environmental dioramas) to a 100mm base, we opened up a greater range of motion and distance without losing the target. The Character and Creature target markers were boosted to 50mm: not so big that they override the stage marker, but big enough to stay within the viewer’s window. And finally, the interactable items were reduced to 40mm, as they’re only intended for first-person viewing up close by individual players.

So, where are we currently? Our in-house image targets all work as intended and allow for stable, effective use in-app. Our next step is to remove the text and settle on a fixed scale at which Vuforia’s targeting reliably identifies the markers. We are also redesigning our image targets for consumers and playtesters.